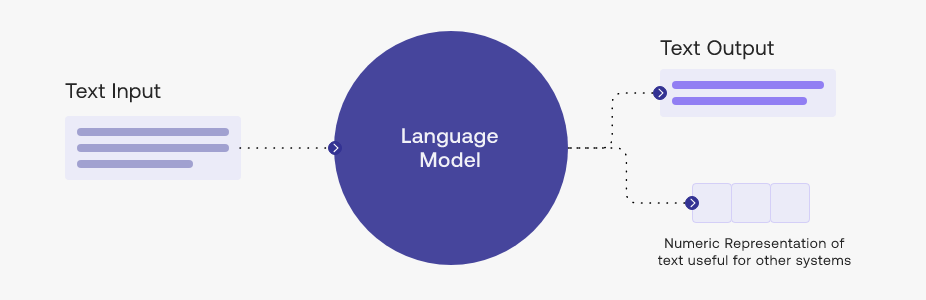

In the realm of artificial intelligence, Large Language Models (LLMs) stand out as marvels of modern technology, capable of processing and generating human language with remarkable fluency. These models, built on neural networks with transformer architectures, have changed the way we interact with language and information. Let's embark on a beginner-friendly journey to unravel the inner workings of Large Language Models and see where their linguistic prowess comes from.

1. Neural Networks: The Building Blocks

Neural networks form the backbone of Large Language Models. Loosely inspired by the interconnected neurons in the human brain, they process and analyze vast amounts of text data.

Through layers of neurons and complex mathematical operations, neural networks learn patterns and relationships within language, enabling LLMs to understand and generate text.
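To make "layers of neurons and mathematical operations" a little more concrete, here is a minimal sketch of a forward pass through a tiny two-layer network in plain Python. The weights (`HIDDEN_W`, `OUTPUT_W`) and biases are hypothetical, hand-picked numbers chosen only for illustration; a real model learns millions or billions of them from data.

```python
import math

def relu(x):
    """A common activation: pass positive values through, clip negatives to zero."""
    return max(0.0, x)

def dense(inputs, weights, biases, activation=None):
    """One layer: each neuron computes a weighted sum of the inputs plus a bias."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical weights for illustration only; training would set these.
HIDDEN_W = [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]]  # 3 hidden neurons, 2 inputs each
HIDDEN_B = [0.0, 0.1, -0.1]
OUTPUT_W = [[1.0, -1.0, 0.5], [-0.5, 0.7, 0.2]]    # 2 output neurons, 3 inputs each
OUTPUT_B = [0.0, 0.0]

def forward(inputs):
    """Run the inputs through the hidden layer, then the output layer."""
    hidden = dense(inputs, HIDDEN_W, HIDDEN_B, relu)
    logits = dense(hidden, OUTPUT_W, OUTPUT_B)
    return softmax(logits)

probs = forward([1.0, 2.0])
print(probs)
```

Each layer transforms the numbers it receives and hands the result to the next one. Learning, which this sketch leaves out, consists of adjusting the weights so that the final probabilities match the training data more closely.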

2. Transformer Architecture: Harnessing Attention

The transformer model, a key component of LLMs, utilizes an attention mechanism to capture dependencies between words in a sentence, regardless of their position.

This attention mechanism allows LLMs to grasp context and relationships within language more effectively, leading to improved text generation and comprehension.
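The idea of attention can be sketched in a few lines of plain Python. This is a simplified version of scaled dot-product attention, under some loud assumptions: the three two-dimensional `embeddings` are made-up numbers, and the same vectors are reused as queries, keys, and values, whereas a real transformer first passes them through separate learned projections.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this word against every word in the sentence, wherever it sits.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Blend the value vectors according to the attention weights.
        mixed = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings for a three-word sentence.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(embeddings, embeddings, embeddings)

for i, row in enumerate(weights):
    print(f"word {i} attends with weights {[round(w, 3) for w in row]}")
```

Notice that the scores depend only on how similar two vectors are, not on how far apart the words sit in the sentence. That is what lets the mechanism relate distant words as easily as neighboring ones.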

3. Pre-training and Fine-tuning: The Training Journey

LLMs undergo two crucial stages of training: pre-training and fine-tuning.

During pre-training, the model is exposed to a diverse range of text data to learn general language patterns and structures.

Fine-tuning involves further training on specific tasks or domains, allowing the model to adapt and specialize for tasks like translation, summarization, or question answering.
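The two stages can be illustrated with a deliberately tiny analogy. The sketch below is not how LLMs are actually trained (they adjust neural network weights by gradient descent); it is a hypothetical word-counting model, with made-up corpora, that only shows the shape of the process: learn from general text first, then keep training the same model on domain text.

```python
from collections import Counter, defaultdict

def train(model, text):
    """Update next-word counts in place from whitespace-separated text."""
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model

def most_likely_next(model, word):
    """Return the word most often seen after `word`, or None if unseen."""
    followers = model.get(word)
    return followers.most_common(1)[0][0] if followers else None

# Made-up corpora for illustration only.
general_corpus = (
    "the bank of the river was muddy . "
    "the bank of the river flooded ."
)
finance_corpus = (
    "the bank approved the loan . "
    "the bank approved the mortgage . "
    "the bank approved the account ."
)

# Stage 1: "pre-training" on general text.
model = train(defaultdict(Counter), general_corpus)
before = most_likely_next(model, "bank")

# Stage 2: "fine-tuning" continues from the same model on domain text.
train(model, finance_corpus)
after = most_likely_next(model, "bank")

print(before, "->", after)
```

After the second stage the model's prediction for "bank" shifts toward the finance domain, while what it learned about "river" in the first stage is still there. Fine-tuning a real LLM follows the same pattern at a vastly larger scale.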

4. Autoregressive Generation: Crafting Text

Large Language Models generate text autoregressively: they predict the next word (more precisely, the next token, which may be a word or a piece of one) in a sequence based on the words that precede it.

By iteratively generating text in this manner, LLMs produce coherent and contextually relevant language, showcasing their fluency and creativity.
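The generation loop itself is simple enough to sketch in plain Python. Everything model-related here is a stand-in: `NEXT_WORD_PROBS` is a hypothetical lookup table with invented probabilities, and it looks only at the most recent word, whereas a real LLM computes its probabilities from the entire preceding sequence. The sketch also always picks the single most likely word (greedy decoding); real systems often sample from the probabilities instead.

```python
# Hypothetical next-word probabilities standing in for a trained model.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.9, "a": 0.1},
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"down": 0.8, "<end>": 0.2},
    "down": {"<end>": 1.0},
}

def generate(max_words=10):
    """Build a sentence one word at a time, feeding each choice back in."""
    sequence = ["<start>"]
    for _ in range(max_words):
        candidates = NEXT_WORD_PROBS.get(sequence[-1], {})
        if not candidates:
            break  # the toy table has nothing to say about this word
        next_word = max(candidates, key=candidates.get)  # greedy choice
        if next_word == "<end>":
            break
        sequence.append(next_word)  # the output becomes part of the input
    return " ".join(sequence[1:])

print(generate())
```

The key step is the last line of the loop: every word the model produces is appended to the sequence and becomes context for the next prediction. The `max_words` limit plays the role of the length cap that real systems also apply.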

5. Challenges and Limitations: Navigating the Terrain

LLMs are not immune to challenges, including biases inherited from training data and the occasional generation of inaccurate or "hallucinated" content.

While LLMs excel at language tasks, they lack true understanding and reasoning capabilities, relying on statistical correlations rather than deep comprehension.

Summary:

In essence, Large Language Models represent a groundbreaking advancement in natural language processing, offering a glimpse into the potential and complexities of AI-driven language understanding. By delving into the intricacies of neural networks, transformer architectures, training processes, and text generation mechanisms, we gain a deeper appreciation for the capabilities and challenges of these remarkable AI systems. As research in this field continues to evolve, the future holds exciting possibilities for the further development and application of Large Language Models in diverse domains.